Industry's demand for flexible embedded solutions providing high performance and short time-to-market has led to the development of configurable and extensible processors. These pre-verified application-specific processors (ASIP) build on proven baseline cores while allowing for some degree of customisation through user-defined instruction set extensions (ISEs) implemented as functional units in an extended microarchitecture.

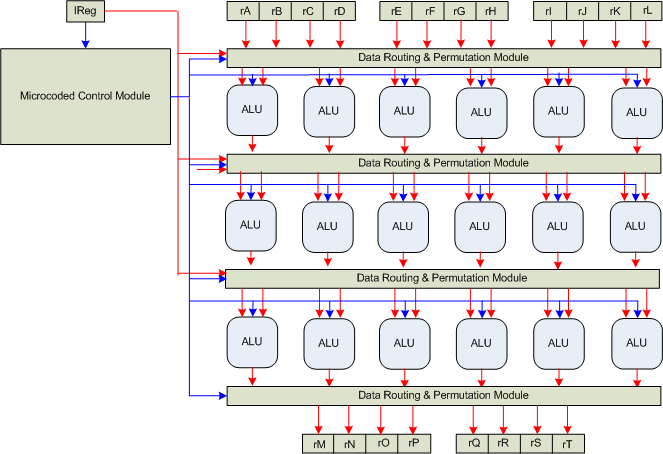

Figure 1. Very generalised CFA, illustrating the Verilog modules and connection in the microarchitecture. The red arrows indicate the data plane, and the blue indicate the control plane. Boxes labelled rA-rL are input registers, grouped into the 4-element vectors used in the EnCore extension interface. Boxes labelled rM-rT are output registers, again grouped as per the EnCore. ALU are single-function; although control exists to select sub-functions such as operand type, each ALU node performs only a single arithmetic operation.

The CFA is a specific microarchitectural construction similar to the CCA, but with some critical differences. The term CFA has been coined to distinguish this work's contribution from that of Mahlke, Clark, et al. [1] [2] [3] concerned with the CCA. Figure 1 illustrates the microarchitecture of a single, very generalised CFA. As with CCAs, multiple CFAs may be utilised in a single processor data-path in order to better cover acceleration opportunities. CFAs do not include dynamic translation hardware [4], preferring to allow for a reconfigurable ISA accessible at the architectural level. The baseline instruction stream is used by CCA to extract complex operations dynamically. The instruction stream, however, provides a lowered and hence less optimal representation for complex operation extraction, than higher-level compiler intermediate representations [5]. In place of the CCA LUT, the CFA has a similar construction called the ``Microcoded Control Unit'' (MCU). The MCU is mutable on the fly using special instructions to move horizontal microcode from memory into the MCU. In this way the MCU may be initialised with microcode embedded in the executable binary, inserted statically at compile time.

CFAs by their very programmable nature implicitly share resources between ISEs; a single CFA is intended to cover many different extension instructions. Configuration memory in the MCU selects the routing and permutation required at each stage in the CFA pipeline. CFAs are designed to allow for exploiting temporal parallelism as well as spatial: CFAs are pipelined to allow single-cycle initiation interval, whereas CCAs are combinational and have a multi-cycle issue latency.

CFA can include a data memory, which is essentially a CCM. CCA would not be able to automatically derive which elements should be stored in a CCM without some degree of lookahead, which is not included in their design. Moreover, control flow in the instruction stream would most likely thwart attempts to accurately place values in either register file or CCM. Multiple data can be read or written in a single cycle, in order to supplement the elements input through the registers (rA-rL in Figure 1). The number of I/O ports and the size of this data memory is subject to performance requirements and constraints.

CFAs are constructed by a greedy algorithm similar to the greedy algorithm used to solve the knapsack problem. Modifications are made because the ``cost'' of two ISEs merged into a single CFA is not additive due to resource sharing, as illustrated in Algorithm 1.

- Instantiate an empty list L of the CFAs to be included in the final hardware model.

- The list of ISEs is converted to CFA Object Models, and ordered descending by their impact on application acceleration (merit), to produce the list S of candidate single-ISE CFAs.

- If a limit is imposed on the maximum number N of ISEs, the list S is reduced to only the top N by merit.

- Iterate through the list S in order; for each CFA C in S:

- Merge C with all CFAs in L to create a new, list M.

- If no CFAs yet exist in L and if C meets area constraints, add C to L and move to next C in I.

- Remove any CFAs in M which has area (including sum of CFA in L) greater than the area constraint, or a permutation and routing module with input width greater than the width constraint.

- If M is empty, all merged CFAs either broke area or input width constraint, so add C to L.

- If M is not empty, Locate the merged CFA in M with the least area increase from merging with C. Add this back into L, replacing the original CFA merged to create the new CFA.

- Take the now-populated list L and emit each CFA Object Model as a Verilog structural model with associated synthesis flow scripts and test-bench.

Algorithm 1. CFA Construction Algorithm, used to convert ISEs as DFGs into a CFA model under area and latency (represented as multiplexor input width) constraints, and select a good combination for implementation. As implemented in the uarchgen tool.

The width constraint of Algorithm 1 must be set to ensure that the latencies of the permutation and routing modules (see Figure 1) do not have excessive latency (and hence require further pipelining). In practice this limits the size of individual CFAs, but if a single CFA breaks the input width multiple CFA will be generated instead. Throughout this thesis, the width constraint is set to 38, as this has been found to perform well with the 130nm standard cell libraries used. Without this limit, the CFA latencies for ISEs can wander outwith those prescribed by the problem definition, due to excessive multiplexing delay.

Algorithm 1 and the CFA structure are related, as the echelon structure of the CFA allows for the resource-sharing (CFA merging) used in the DSE process to operate in a very simple fashion: Where an asynchronous design would have to deal with real-numbered latencies during resource sharing, when two CFAs are merged all that needs to happen is iteration through the echelons of one CFA adding ALU from the other where these are not already present. The runtime of the CFA ``Merging'' process is therefore very low; linear in the number of ALU in the largest of the two CFAs merged. When constructing a single-ISE CFA such as before merging in Algorithm 1, there is a directly implied assignment of ALU operand source and sink. ALU source operands are mapped to the first echelon where all inputs are available. An ALU sink operand is mapped to the echelon existing at the cycle resulting from adding the source cycle to the number of cycles (integer) that the ALU takes to produce a result. Prior to CFA construction all ALU types which are to be used (e.g. Add, Multiply, etc) are taken from DesignWare and synthesised. Pipeline registers are added to ALU where necessary and timed to preserve the clock frequency desired. Input and output delays are included in ALU module synthesis constraints to allow for multiplexing time. Each ALU hardware latency (in cycles) is therefore known in advance. This preparation allows the CFA construction to operate in the knowledge that the design will close timing, removing the need for feedback from CFA DesignCompiler synthesis. The I/O delay allowance generally increases the area of each ALU by a little to produce a faster implementation, but is necessary to close timing in the face of multiplexing delay. Having pipeline registers pre-balanced for the clock frequency required removes the need for further re-timing when the CFA is synthesised, and so makes DesignCompiler synthesis faster; fast synthesis is a desirable property when attempting to perform DSE.

-

A. Hormati, N. Clark, and S. Mahlke.

Exploiting narrow accelerators with data-centric subgraph mapping. In Proc. 2007 International Symposium on Code Generation and Optimization (CGO), 2007. -

N. Clark, A. Hormati, S. Mahlke, and S. Yehia.

Scalable subgraph mapping for acyclic computation accelerators. In Proc. 2006 Intl. Conference on Compilers, Architecture, and Synthesis for Embedded Systems (CASES), 2006. -

S. Yehia, N. Clark, S. Mahlke, and K. Flautner.

Exploring the design space of lut-based transparent accelerators. In Proc. 2005 Intl. Conference on Compilers, Architecture, and Synthesis for Embedded Systems (CASES), 2005. -

N. Clark, M. Kudlur, H. Park, S. Mahlke, and K. Flautner.

Application specific processing on a general purpose core via transparent instruction set customization. In Proc. 37th Intl. Symposium on Microarchitecture (MICRO), 2004. -

Christian Laetsch.

A multi-layer intermediate representation for ASIP design. Master's thesis, EPFL, Lausanne, Switzerland, September 2003.