The automation of ISE exploration has been actively studied in recent years, leading to a number of algorithms e.g. [1] [2] [3] [4] which derive the partitioning of hardware and software under microarchitectural constraints. Work is still being performed in defining the full space of exploration even in purely arithmetic ISA design [5]. Work to include better models in tools has allowed for better decisions about the performance and feasibility of extensions [6], but further work is required.

Most current approaches to automated ISE incorporate two phases:

- Identification; whereby formalisations of basic blocks are analysed to produce ISEs in the form of DFG. Current algorithms use a combination of register file ports, bandwidth to other on-chip-memories, number and size of operations to constrain this problem, effectively a search technique. In order to guide the search, an abstraction of the speed of such operators in hardware is used to deduce the delay of the operation in software (the sum of all its nodes) and in hardware (the sum of the nodes in the critical path).

- Selection; whereby the identified ISEs of the previous phase are organised in terms of their performance, via Pareto-optimal ordering [7], greedy selection of the as many best performing templates as will fit in the coding space, or a knapsack formulation based on cost-benefit.

Algorithms differ in their optimality and runtime generally by trading off greed for speed. Some approaches like the Tensilica XPRES [8] compiler will identify a massive number of non-orthogonal ISEs in order to provide a large degree of freedom in the selection. On the other hand, algorithms like the Atasu [3] and ISEGEN [2] formulations focus on modelling some aspect of the microarchitecture (most often delay), and then greedily identify the largest and best performing template for each basic block. This process is then repeated for the remainder of the basic block. This is an example of the trade-off between greed and speed, as it provides space reduction in both the identification and selection phases. In addition, selection phases have the benefit of orthogonal templates; the performance may simply be summed over the set selected.

This approach is somewhat flawed in the face of wire-delays, and in larger templates the algorithms will become less and less accurate at predicting the actual delay of an ISE. This flaw is as a product of the automated ISE algorithms currently blindly targeting ASIC implementation of the resulting extensions, a process which is wildly affected by a number of design concerns outwith the control or speculation of current automated ISE algorithms. The mapping of ISEs to an existing CFA would permit far more accurate analysis of the delays inherent in mapping a particular extension. This is because the hardware is already realised and may be modelled in terms of its actual, empirically determined performance.

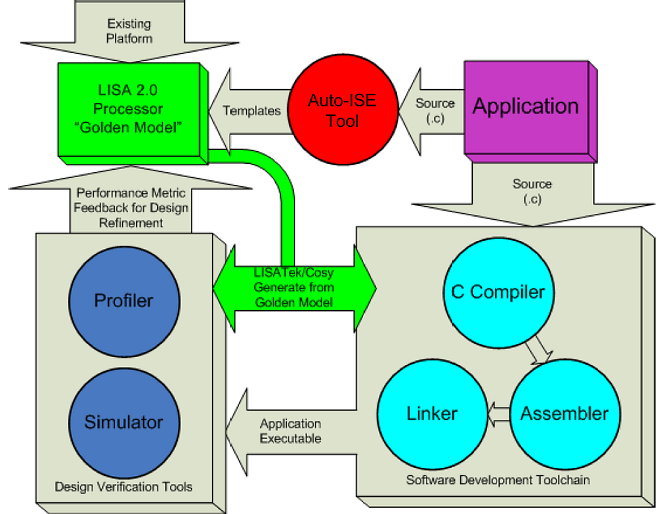

The exploration approach of using a range of tools, iteratively operating on a canonical system-level ADL is described as ``Compiler-in-Loop Design-Space Exploration'' [9]. It was originally motivated [10] through the discovery that iterative and methodical exploration of ASIP design is beneficial in decreasing time-to-market. CoSy [11] and LISATek [12] tools feature in many such frameworks; Figure 1 illustrates such a combination.

- Armita Peymandoust, Laura Pozzi, Paolo Ienne, and Giovanni De Micheli.

Automatic instruction set extension and utilisation for embedded processors. In Proceedings of the 14th International Conference on Application-specific Systems, Architectures and Processors, The Hague, The Netherlands., 2003. - Biswas, Sundarshan Banerjee, Nikil D. Dutt, Laura Pozzi, and Paolo

Ienne.

Isegen: An iterative improvement-based ise generation technique for fast customization of processors. IEEE Transactions on VLSI, 14(7), 2006. - Kubilay Atasu, Gunhan Dundar, and Can Ozturan.

An integer linear programming approach for identifying instruction-set extensions, 2005. - Laura Pozzi, Kubilay Atasu, and Paolo Ienne.

Exact and approximate algorithms for the extension of embedded processor instruction sets. IEEE Transactions on Computer- Aided Design of Integrated Circuits and Systems, 2006. - Ajay K. Verma and Paolo Ienne.

Towards the automatic exploration of arithmetic circuit architectures. In In Proceedings of the 43rd Design Automation Conference, San Francisco, California, 2006. - Laura Pozzi and Paolo Ienne.

Exploiting pipelining to relax register-file port constraints of instruction-set extensions. In In Proceedings of the International Conference on Compilers, Architectures, and Synthesis for Embedded Systems, San Francisco, Calif, 2005. - Tony Givargis, Frank Vahid, and Jorg Henkel.

System-level exploration for pareto-optimal configurations in parameterized systems-on-a-chip. In ICCAD, 2001. - Tensilica Inc. The xpres compiler: Triple-threat solution to code performance challenges. Tensilica Inc Whitepaper, 2005.

- M. Hohenauer, H. Scharwaechter, K. Karuri, O. Wahlen, T. Kogel, R. Leupers,

G. Ascheid, and H. Meyr.

Compiler-in-loop architecture exploration for efficient application specific embedded processor design, 2004. - T. Glokler, A. Hoffmann, and H. Meyr.

Methodical low-power asip design space exploration. VLSI Signal Processing, 33, 2003. - ACE CoSy Website - http://www.ace.nl/compiler/cosy.html.

- CoWare LISATek Datasheet - http://www.coware.com/PDF/products/LISATek.pdf.