Self-Structuring AutoEncoders

Combining Structure and Semantics for Efficient Representation Learning

The principle of composition states that the meaning of a whole comes from its parts and how they fit together. This is a really efficient principle, because all you need to know is the meaning of a smaller set of parts and the rules for combining them in order to understand a near infinite set of higher order objects. It allows humans to understand the meanings of new words, sentences, and even entire books without having to memorize them. Machine learning models aren’t tasked with learning compositional structure explicitly, but what happens when they are? Does this help us create more efficient learners? In this blog we introduce an architecture, the Self-Structuring AutoEncoder (Self-StrAE), and its extension Banyan, which do exactly that. As a result, they are able to learn semantic representations in a radically more efficient manner, requiring very little data and very few parameters while remaining competitive with much bigger and more resource intensive models.

Self-Structuring AutoEncoders

Relevant Papers: EMNLP 2023, SemEval 2024

Model

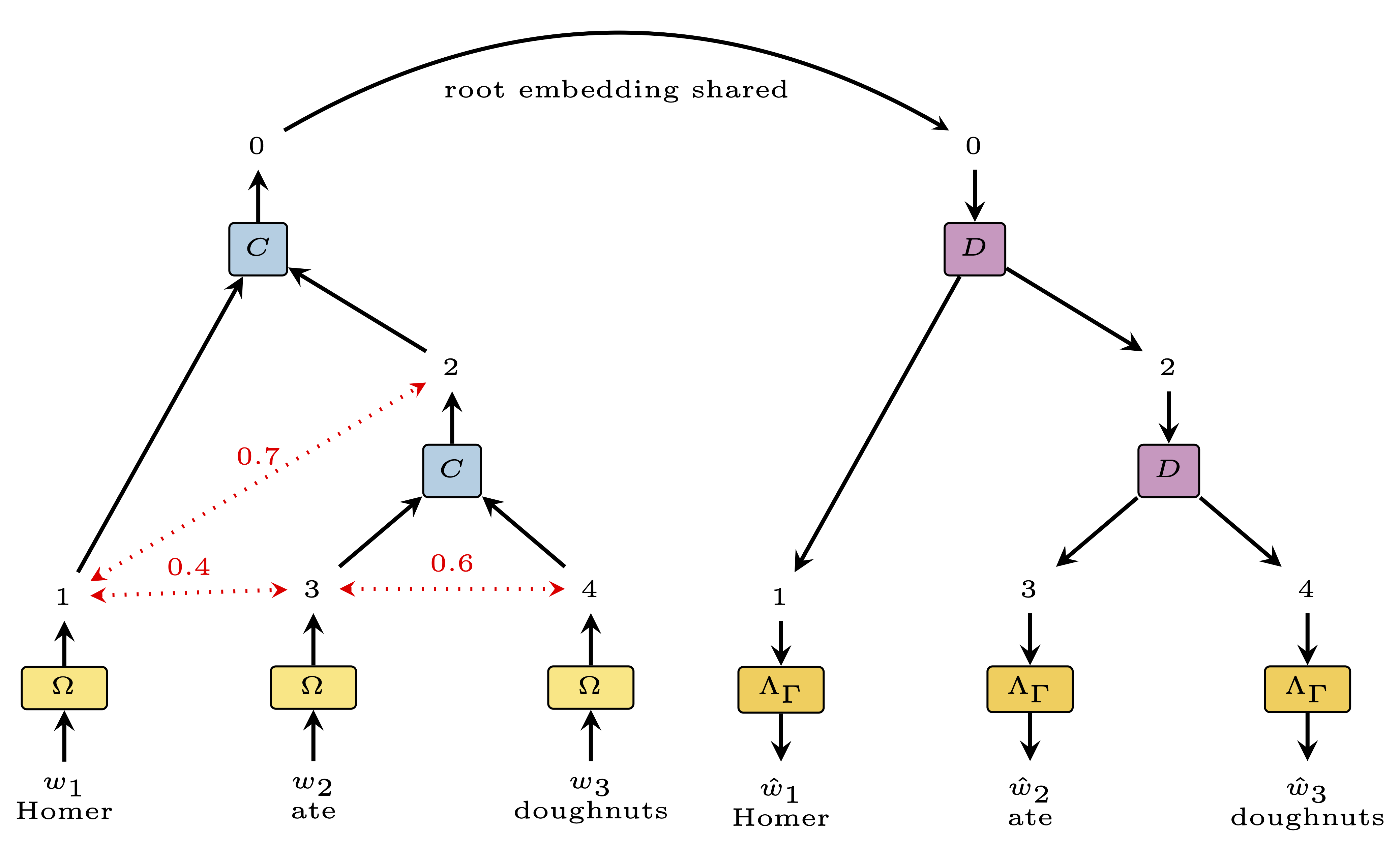

Self-StrAE is conceptually a fairly simple model. If we look at the figure above we can understand the forward pass pretty easily. We start with a set of tokens (words or subwords in a sentence) and then perform the following steps:

- Embedding: We embed our tokens into a continuous space. This is done using a standard embedding layer, which maps each token to a dense vector representation.

- Compute Similarities: The next step is to take the cosine similarity between all adjacent tokens. This tells us how much they relate to each other (or how well they fit together if you like). We then pick the adjacent pair with the highest similarity and merge it. In the figure above, that would initially be ‘ate’ and ‘doughnuts’.

- Merge: We merge the representations of the two tokens into a single representation that captures their composition. We do this by using a composition function. This is simply a function that takes two vectors as input and returns a single vector as output.

- Repeat: We repeat the process until we have a single representation for the entire input sequence. In our example that means that we now merge ‘Homer’ with our new representation of ‘ate doughnuts’.

- Decompose: Now we have a single vector that represents the entire input sequence, and if we look at the merge history we can also see that it defines a tree over the input. This lets us try and reconstruct the original embeddings in an ordered fashion. To do this we use a decomposition function which takes a single vector as input and returns two vectors (left and right child representations) as the output. We can repeatedly apply this function going from root to leaves until we have a reconstruction of the whole input and its intermediate parts.
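
The steps above can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions rather than the actual implementation: `compose` and `decompose` are placeholders (an elementwise mean and a plain copy) standing in for the learned functions, the two-dimensional embeddings are made up, and all function names are hypothetical.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def compose(left, right):
    """Placeholder composition (assumption): elementwise mean of the children."""
    return [(a + b) / 2 for a, b in zip(left, right)]

def decompose(parent):
    """Placeholder decomposition (assumption): both children copy the parent."""
    return list(parent), list(parent)

def encode(tokens, embed):
    """Greedily merge the most similar adjacent pair until one node remains.

    Returns (tree, vector), where tree is a nested tuple recording the merges.
    """
    nodes = [(tok, embed[tok]) for tok in tokens]
    while len(nodes) > 1:
        sims = [cosine(nodes[i][1], nodes[i + 1][1]) for i in range(len(nodes) - 1)]
        i = max(range(len(sims)), key=sims.__getitem__)  # first best pair wins ties
        (left_tree, left_vec), (right_tree, right_vec) = nodes[i], nodes[i + 1]
        nodes[i:i + 2] = [((left_tree, right_tree), compose(left_vec, right_vec))]
    return nodes[0]

def decode(tree, vector):
    """Walk the tree from root to leaves, returning (token, reconstruction) pairs."""
    if isinstance(tree, str):
        return [(tree, vector)]
    left_vec, right_vec = decompose(vector)
    return decode(tree[0], left_vec) + decode(tree[1], right_vec)

# Made-up embeddings in which 'ate' and 'doughnuts' are the closest neighbours.
embed = {"Homer": [1.0, 0.0], "ate": [0.0, 1.0], "doughnuts": [0.1, 1.0]}
tree, root = encode(["Homer", "ate", "doughnuts"], embed)
leaves = decode(tree, root)
print(tree)  # ('Homer', ('ate', 'doughnuts'))
```

The merge history is stored as the nested tuple `tree`, so the decoder can retrace it from the root and return one reconstruction per input token, in the original order.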

For the first version of Self-StrAE we used a contrastive loss as our objective function. This means that we asked the representation of each node in the encoder to be as close as possible to the reconstructed representation of the same node in the decoder, and vice versa, while remaining distinct from all other node representations.
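
To make the objective concrete, here is a minimal sketch of a symmetric contrastive loss over node representations. The exact formulation is not spelled out in this post, so the details are assumptions: cosine similarity as the score, a temperature `tau`, and a softmax in both directions (encoder to decoder and decoder to encoder). The function names are hypothetical.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def log_softmax_at(scores, target):
    """Log-probability of `target` under a softmax over `scores`."""
    m = max(scores)
    log_z = m + math.log(sum(math.exp(s - m) for s in scores))
    return scores[target] - log_z

def contrastive_loss(enc, dec, tau=0.1):
    """Pull enc[i] towards dec[i] and push it away from every dec[j], j != i.

    `enc` and `dec` are lists of node vectors in the same node order.
    `tau` is an assumed temperature, not a value taken from the paper.
    """
    n = len(enc)
    sims = [[cosine(e, d) / tau for d in dec] for e in enc]
    enc_to_dec = -sum(log_softmax_at(sims[i], i) for i in range(n)) / n
    dec_to_enc = -sum(
        log_softmax_at([sims[j][i] for j in range(n)], i) for i in range(n)
    ) / n
    return (enc_to_dec + dec_to_enc) / 2

enc_nodes = [[1.0, 0.0], [0.0, 1.0]]
aligned = contrastive_loss(enc_nodes, [[1.0, 0.0], [0.0, 1.0]])
swapped = contrastive_loss(enc_nodes, [[0.0, 1.0], [1.0, 0.0]])
print(aligned < swapped)  # True
```

The loss is low when each decoder node matches its own encoder node and high when the reconstructions are confused with other nodes.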

And that’s it, fairly simple, right? However, it turns out that this simple process actually leads to some pretty interesting results. Let’s consider the learning process. Intuitively, the model starts from random embeddings, and therefore an essentially random merge order. Throughout training, tokens which are often part of the same merges will have their representations drawn together, so the representation reflects what they are likely to compose with. The model can then leverage any regularities to better perform reconstruction. This leads the representations to further reflect likely compositions and consequently increases the regularity in the structure. Ultimately, this leads to representations which must, by virtue of the training procedure, reflect the compositional semantics learned by the model.

Results

| Model | Simlex | Wordsim S | Wordsim R | STS 12 | STS 16 | STS B | SICK R |
|---|---|---|---|---|---|---|---|
| Self-StrAE | 13.04 | 48.19 | 45.47 | 34.42 | 49.93 | 36.68 | 51 |
| Fasttext | 25.8 | 50.8 | 29.18 | 4.35 | 32.43 | 16.93 | 41.58 |
| Bi-LSTM | 12.88 | 39.46 | 32.22 | 9.22 | 33.78 | 14.06 | 40.36 |
| RoBERTa | 9.92 | 26.6 | 6.2 | 29.48 | 50.88 | 38.36 | 49.58 |

We compared Self-StrAE with a few unstructured baselines to see how it performed. To assess data efficiency, we pre-trained all the models from scratch on about 10 million tokens of English Wikipedia and then evaluated them on a few standard semantic similarity tasks that cover both the word and the sentence levels. We can see that Self-StrAE not only performs really competitively, but is also able to transfer its performance from the word level (Simlex + Wordsim) seamlessly to the sentence level (STS + SICK). This is because it can take advantage of composition to generalise to different levels of hierarchy, a unique capability that our inductive bias unlocks. The other important point is that Self-StrAE is a really simple model: it just has an embedding matrix and two functions for composition and decomposition. In our case these are just simple linear layers, which means that Self-StrAE is able to achieve these results using just a fraction of the parameters of the baselines. So we have a model that shows both promising performance and serious efficiency.

| Model | Self-StrAE | Bi-LSTM | RoBERTa |
|---|---|---|---|
| Params | 430 | 181,800 | 3,950,232 |
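
As a rough sanity check on how small two linear layers can be, the arithmetic below counts the weights and biases of a composition layer (two children in, one parent out) and a decomposition layer (one parent in, two children out). The width `d` the functions operate on is not stated in this post and need not equal the full embedding dimension, so the values used here are assumptions, chosen only because they reproduce the Self-StrAE counts reported in the parameter tables of this post.

```python
def linear_params(n_in, n_out, bias=True):
    """Number of parameters in a dense layer: weight matrix plus optional bias."""
    return n_in * n_out + (n_out if bias else 0)

def strae_function_params(d):
    """Composition (2d -> d) plus decomposition (d -> 2d), both with biases."""
    compose = linear_params(2 * d, d)
    decompose = linear_params(d, 2 * d)
    return compose + decompose

# Hypothetical widths; the actual configurations are described in the papers.
print(strae_function_params(10))  # 430
print(strae_function_params(16))  # 1072
```

In closed form this is 4d² + 3d, so the function parameters stay in the hundreds or low thousands for small widths, before counting the embedding matrix.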

Banyan

Self-StrAE showed promise, but to make it really effective we had to make a few changes, which we outline in our new paper Banyan. At the core are three modifications:

- Entangled Trees: Conceptually these are pretty simple. Our model operates over a batch, and that means we will be composing the same nodes multiple times (e.g. if ‘ate doughnuts’ occurs multiple times), so we can share the same nodes across different trees. This is a small change, but it has a big impact on the efficiency of the model because it reduces the memory footprint pretty radically. It also means that when we decompose we aggregate the representations of the same node across different contexts, which gives the model a more global view.

- Diagonal Functions: We switched from using linear layers to something even simpler. Diagonal functions mean that instead of using a full matrix we instead just use a vector of weights. This reduces the number of parameters and turns out to massively improve the performance of the model. This is because the initial representations need to be really expressive for reconstruction to succeed and the diagonal functions really encourage that.

- Switching to Cross-Entropy over the Vocabulary: Because the diagonal functions really force the model to learn expressive representations we can switch from using contrastive loss to instead simply predicting what the original input tokens were. This is a much simpler objective and it is actually more robust because it is grounded in the data and therefore avoids some of the convergence issues with contrastive loss.
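
The three modifications can be illustrated with a small sketch in plain Python. It is a simplification under stated assumptions, not the Banyan implementation: shared nodes are found by hashing nested tuples, the diagonal composition is taken to be a per-dimension weighted sum of the two children (the exact parametrisation is in the paper), and the logits fed to the cross-entropy are made-up numbers. All names are hypothetical.

```python
import math

def diagonal_compose(left, right, w_left, w_right):
    """Diagonal composition (assumed form): one weight per dimension per child."""
    return [wl * l + wr * r
            for l, r, wl, wr in zip(left, right, w_left, w_right)]

def collect_nodes(tree, out):
    """Append every node (leaf token or subtree) under `tree` to `out`."""
    out.append(tree)
    if not isinstance(tree, str):
        collect_nodes(tree[0], out)
        collect_nodes(tree[1], out)
    return out

def cross_entropy(logits, target):
    """Negative log-probability of `target` under a softmax over `logits`."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_z - logits[target]

# Entangled trees: identical subtrees in a batch collapse to one shared node.
batch = [("Homer", ("ate", "doughnuts")), ("Bart", ("ate", "doughnuts"))]
all_nodes = []
for tree in batch:
    collect_nodes(tree, all_nodes)
shared_nodes = set(all_nodes)
print(len(all_nodes), len(shared_nodes))  # 10 7

# Diagonal function: two weight vectors instead of a full weight matrix.
parent = diagonal_compose([1.0, 2.0], [3.0, 4.0], [0.5, 0.5], [0.5, 0.5])
print(parent)  # [2.0, 3.0]

# Cross-entropy over a toy two-word vocabulary; index 0 is the true token.
toy_logits = [2.0, 0.1]
loss_correct = cross_entropy(toy_logits, 0)
loss_wrong = cross_entropy(toy_logits, 1)
```

In this toy batch the two sentences have ten nodes between them but only seven distinct ones, which is where the memory saving comes from. The diagonal composition needs only two weight vectors of length d, compared with a 2d × d matrix for a linear layer.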

The full details are in the paper, but the key point is that with a few simple changes we can make the model much more effective. To test that, we ran a series of experiments against some tougher baselines. We compared Self-StrAE with our new model Banyan as well as an eight layer RoBERTa transformer and GloVe vectors. We trained our models on 10 million tokens to make sure that we stay efficient, while we let the baselines train on 100 million tokens so that they aren’t limited by lack of scale. For both the RoBERTa and the GloVe baselines we also include a tougher variant. For GloVe we do some manual augmentation to remove uninformative words from the sentence representation, and for RoBERTa we do some extra SimCSE training to boost the performance of its representations. We also increased the embedding dimension to 256 in order to make things just that little bit more challenging for Banyan.

On the Sentence Level:

| Model | STS-12 | STS-13 | STS-14 | STS-15 | STS-16 | STS-B | SemRel |
|---|---|---|---|---|---|---|---|
| Self-StrAE | 31.98 | 53.88 | 37.73 | 55.23 | 55.55 | 39.53 | 50.05 |
| GloVe | 31.61 | 21.69 | 27.37 | 40.42 | 29.27 | 28.25 | 41.20 |
| + stopword rm | 39.00 | 41.61 | 39.31 | 51.06 | 45.14 | 48.40 | 42.37 |
| RoBERTa | 42.77 | 51.70 | 45.67 | 63.67 | 59.60 | 39.97 | 52.73 |
| + SimCSE | 50.63 | 62.63 | 54.17 | 68.77 | 66.67 | 53.53 | 59.27 |
| Banyan | 51.20 | 69.10 | 63.20 | 73.20 | 66.60 | 61.50 | 61.60 |

On the Word Level:

| Model | Simlex | Wordsim-S | Wordsim-R |
|---|---|---|---|
| Self-StrAE | 13.80 | 54.38 | 52.85 |
| GloVe | 27.47 | 62.53 | 51.00 |
| RoBERTa | 29.23 | 61.97 | 46.00 |
| Banyan | 16.57 | 63.25 | 69.00 |

On Retrieval and Classification Tasks:

| Model | Quora R@1 | Quora R@10 | Arguana R@1 | Arguana R@10 | SST-2 | MRPC |
|---|---|---|---|---|---|---|
| Self-StrAE | 29.59 | 44.77 | 9.96 | 21.48 | 74.67 | 80.34 |
| GloVe | 26.08 | 43.17 | 6.18 | 24.68 | 75.83 | 81 |
| + stopword rm | 38.78 | 62.15 | 9.89 | 33.00 | 76.50 | 81 |
| RoBERTa | 37.67 | 58.78 | 8.18 | 28.85 | 75.68 | 81 |
| + SimCSE | 45.09 | 68.74 | 10.06 | 37.36 | 75.97 | 80.83 |
| Banyan | 50.19 | 75.80 | 27.41 | 49.60 | 77.20 | 79.57 |

Across the board Banyan significantly outperforms our original Self-StrAE and is able to match, and generally even exceed, the performance of these tougher baselines. Importantly, we are able to do so while maintaining all the efficiency benefits of the original model. This means we have an effective and efficient learner, and that can become a real game changer in contexts where scale is not available.

A classic example of such a situation is low resource languages. There are many cases where the data needed to pre-train a big model simply isn’t available, or the communities don’t have the compute resources to do so, and that means that a lot of NLP tasks simply aren’t possible. In fact it’s such an issue that a recent challenge was held to try and provide good embeddings for just these languages, and now we can really put Banyan to the test. This time we don’t compare against baselines we trained from scratch, but instead against large SoTA foundation models. These include the latest LLMs, pre-trained multilingual encoders and even specialised embedding models trained on supervised semantic datasets.

| Model | Spanish | Telugu | Marathi | Hindi | Amharic | Afrikaans |
|---|---|---|---|---|---|---|
| Llama-3.1 (8B) | 66.7 | 65.6 | 63.4 | 61.7 | 64.1 | 65.4 |
| Mistral Nemo | 66.2 | 57.0 | 52.3 | 55.8 | 53.2 | 58.3 |
| MiniLM-L12 | 58.8 | 34.8 | 39.5 | 43.8 | 9.60 | 74.1 |
| Paraphrase XLM-R | 71.7 | 58.1 | 79.6 | 52.0 | 64.6 | 76.8 |
| XLM-R | 68.9 | 46.3 | 55.7 | 52.7 | 57.3 | 56.2 |
| XLM-R (FT) | 72.8 | 68.8 | 75.1 | 57.6 | 59.6 | 72.6 |
| Banyan | 61.0 | 71.1 | 67.7 | 61.8 | 66.2 | 78.7 |

On low resource languages Banyan is really able to shine and often even outperforms the SoTA foundation models and supervised embedding models. It does so while learning fully from scratch with very little data and no supervision. We are able to pretrain from scratch in under an hour on a single GPU, and this can be done using free compute from Google Colab. Moreover, because the model is so lightweight, inference can easily be run on a laptop’s CPU, which makes it really accessible. To underscore just how efficient Banyan is compared to the baselines we can look at the number of non-embedding parameters:

| Model | Banyan | Self-StrAE | RoBERTa (M) | MiniLM-L12 | XLM-R | Llama 3.1 | Mistral Nemo |
|---|---|---|---|---|---|---|---|
| Params | 14 | 1072 | ≈10M | ≈21M | ≈85M | ≈8B | ≈12B |

Good and cheap embedding models are useful for many applications. For example, the digital humanities need to organise corpora of ancient languages so that researchers can more easily access the texts they need. But these corpora are small, and these languages are unlikely to be present in the pretraining corpora of larger models. Banyan provides an efficient way to produce representations for use cases like these, and for low resource languages and underrepresented communities more generally.